FaceReader 9 Release – Improved Analysis Performance and Possibilities

We have surpassed the previous version again and are very satisfied with the new FaceReader 9 release. FaceReader 9 has improved deep-learning-based modeling capabilities for higher-quality, faster analysis. It features a completely new project analysis module that makes analyzing your results much easier. There are also several new features and outputs, such as gaze direction, head position, heart rate variability, and operators in the custom expression module.

Technological Advances in FaceReader 9

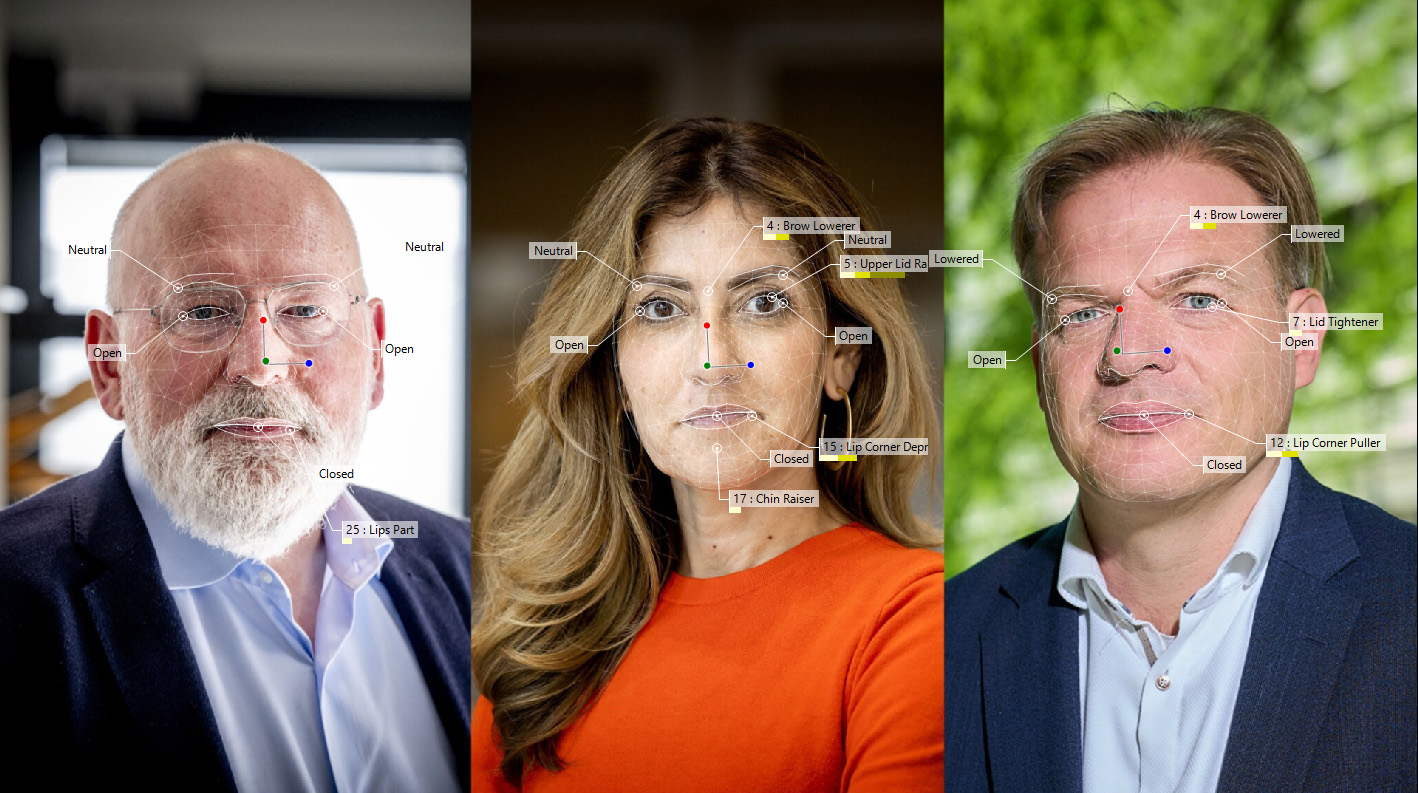

We have introduced a new deep-learning-based face modeling technique that handles poor lighting and varied head poses much better. We have also retrained our deep neural networks on larger training sets, which should result in more robust classification of facial expressions and Action Units (the Validation White Paper is available from Noldus on request). This can be especially important when collecting data in home environments with FaceReader Online (a FaceReader 9 update is coming soon). Thanks to further computational optimizations, FaceReader also runs much faster, reaching beyond-real-time speeds of up to 60 fps on regular CPUs.

FaceReader 9 Facilitates the Processing of Research Results

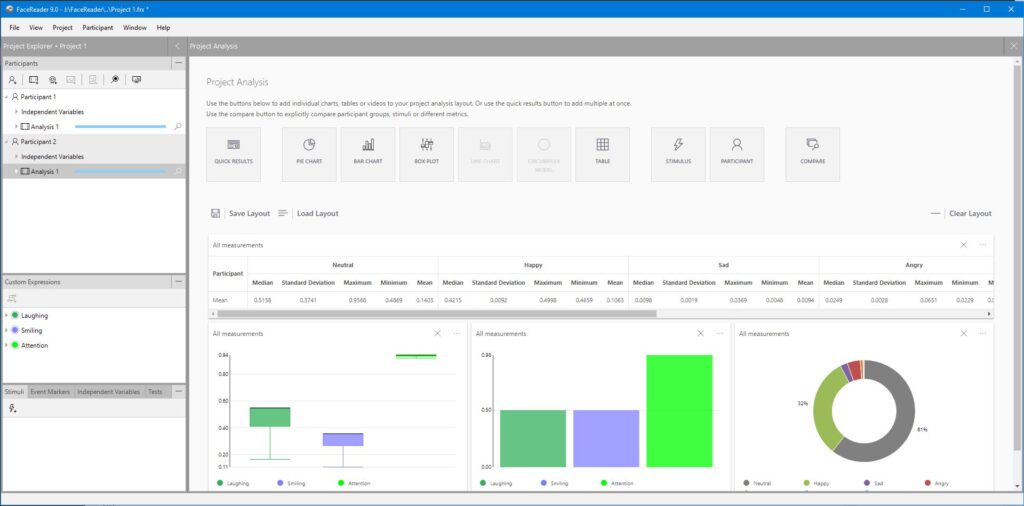

The new project analysis module makes it possible to perform more elaborate processing steps. In the previous version, you could only get the mean basic emotion of one video and then the mean of all participants. Now, for each data type (e.g., basic emotions, Action Units, custom expressions), you can choose among several temporal and participant aggregations (Mean, SD, Min, Max, Median). This allows you, for example, to investigate the change in emotion by taking the Min and Max values during a certain event. These options make it very easy to create a per-participant summary table that you can then use in SPSS or another statistics program.
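To make the idea of temporal versus participant aggregation concrete, here is a minimal Python sketch of the same two-level logic: each participant's time series is first reduced over time (e.g., Max during an event), and those per-participant values are then reduced across participants (e.g., Mean). This is not FaceReader's implementation or export format. The input layout (a dict mapping participant IDs to per-frame intensity values for one data type within one event) and the helper names `summarize`, `participant_table`, and `to_csv` are hypothetical, chosen only for illustration.

```python
import csv
import io
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

# Aggregation options mirroring the ones listed in the text.
# Note: statistics.stdev ("SD") needs at least two values.
AGGREGATORS: Dict[str, Callable[[Sequence[float]], float]] = {
    "Mean": statistics.mean,
    "SD": statistics.stdev,
    "Min": min,
    "Max": max,
    "Median": statistics.median,
}


def summarize(
    samples: Dict[str, List[float]], temporal: str, participant: str
) -> Tuple[Dict[str, float], float]:
    """Aggregate each participant's series over time, then across participants."""
    per_participant = {
        pid: AGGREGATORS[temporal](values) for pid, values in samples.items()
    }
    overall = AGGREGATORS[participant](list(per_participant.values()))
    return per_participant, overall


def participant_table(
    samples: Dict[str, List[float]], temporals: List[str]
) -> List[Dict[str, object]]:
    """Build one row per participant with the requested temporal aggregations."""
    rows: List[Dict[str, object]] = []
    for pid, values in samples.items():
        row: Dict[str, object] = {"participant": pid}
        for name in temporals:
            row[name] = AGGREGATORS[name](values)
        rows.append(row)
    return rows


def to_csv(rows: List[Dict[str, object]]) -> str:
    """Serialize the summary rows as CSV text for import into a stats package."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical per-frame "Happy" intensities (0-1) during one event window.
    samples = {
        "P01": [0.10, 0.40, 0.70],
        "P02": [0.20, 0.30, 0.50],
        "P03": [0.05, 0.60, 0.90],
    }
    per_participant, overall = summarize(samples, temporal="Max", participant="Mean")
    print(per_participant, overall)
    # Min and Max per participant, e.g. to look at the change during the event.
    print(to_csv(participant_table(samples, ["Min", "Max"])))
```

Keeping the temporal and participant steps as separate, independently selectable functions mirrors the way the text describes choosing one aggregation for each level.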

You can also use this to analyze Action Unit activations in more detail. If you already know which combination of AUs is relevant, the custom expression module lets you study that combination directly and track the result over time. This module now features a few more building blocks, and we have included several examples such as laughter, smiling, and attention (see video below: light green = attention, blue = smiling, dark green = laughter). In addition, we have written a white paper with many tips on creating custom expressions (available from Noldus on request).

More Updates and More Information

These are just some of the developments. The Baby FaceReader and remote PPG modules have also been updated. In addition, FaceReader 9 includes some of the first outcomes of our EyeReader project (more information on this will follow soon). For more information and to purchase FaceReader 9, see the Noldus website.