Reading Faces

Apart from being the channel used to identify other members of the species, the human face provides a number of signals essential for interpersonal communication in our social life. The face houses the speech production apparatus and is used to regulate conversation by gazing or nodding, and to interpret what is being said by lip reading. It is our most direct and natural means of communicating and understanding someone’s affective state and intentions, based solely on the facial expression being displayed.

The face is a multi-signal sender/receiver, capable of tremendous flexibility and specificity. Automating the analysis of facial signals can therefore be highly beneficial in many fields, such as behavioral science, market research, communication, education, and human-machine interaction.

Face Detection

The first step of our Face Analysis system consists of accurately finding the location and size of faces in arbitrary scenes, under varying lighting conditions and against complex backgrounds. Face detection, combined with eye detection, gives us a perfect starting point for the subsequent face modeling and expression analysis.

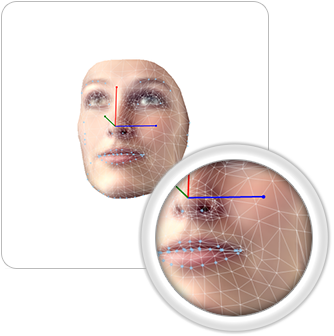
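To illustrate why eye detection is a useful companion to face detection, the sketch below derives two normalization cues from a pair of eye centers: the in-plane head rotation and the inter-ocular distance. It is only an illustration under stated assumptions; the function name `eye_alignment` and the coordinate convention are hypothetical and do not describe how our system is implemented.

```python
import math

def eye_alignment(left_eye, right_eye):
    """Return (roll_degrees, inter_ocular_distance) from two eye centers.

    Assumption: points are (x, y) pixel coordinates with y growing
    downwards, and left_eye is the eye that appears on the left of the image.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    roll = math.degrees(math.atan2(dy, dx))  # in-plane rotation of the eye line
    distance = math.hypot(dx, dy)            # usable as a scale reference
    return roll, distance

# Hypothetical eye positions on a level, upright face.
roll, distance = eye_alignment((100.0, 120.0), (160.0, 120.0))
```

A later stage could rotate the face by `-roll` and rescale it by `distance` so that every face reaches the modeling step in a comparable pose and size.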

Face Modeling

Since the beginning of the 1990s, VicarVision has been developing and fine-tuning an advanced 3D face modeling technique. In its current state, the technique is capable of automatically modeling a previously unknown face, in real time, using a 3D face model with over 500 keypoints. The result is a parametric representation of the face that models the geometry of its rigid features. This makes the analysis of facial expressions, head pose and gaze direction possible and proves useful in a wide range of applications.

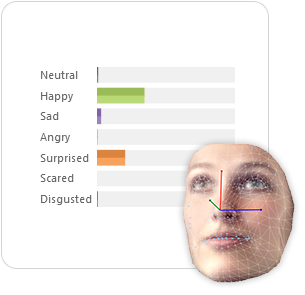

Facial Expression Recognition

Facial expressions play a crucial role in social communication between people. Our face modeling technique allows the classification of the six most prominent universal facial expressions, related to the following emotional states: anger, disgust, fear, joy, sadness and surprise, plus a neutral state. Each of these expressions is scored continuously with a value between zero and one.
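As a minimal sketch of how such per-frame output might be consumed, the example below keeps one score per expression in the range zero to one and picks the strongest one. The names `EXPRESSIONS` and `summarize` and the sample values are hypothetical, and the sketch assumes the scores are independent rather than summing to one.

```python
EXPRESSIONS = ("neutral", "anger", "disgust", "fear", "joy", "sadness", "surprise")

def clamp01(value):
    """Keep a score inside the closed interval [0, 1]."""
    return max(0.0, min(1.0, value))

def summarize(scores):
    """Return (cleaned scores, dominant expression) for one video frame.

    Expressions missing from the input are treated as 0.0 (an assumption).
    """
    cleaned = {name: clamp01(scores.get(name, 0.0)) for name in EXPRESSIONS}
    dominant = max(cleaned, key=cleaned.get)
    return cleaned, dominant

# Hypothetical scores for a single frame.
frame = {"neutral": 0.12, "joy": 0.81, "surprise": 0.30}
cleaned, dominant = summarize(frame)
```

Keeping the full score dictionary, instead of only the dominant label, preserves mixed expressions such as joy combined with surprise.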

Action Units

The six basic emotions are only a fraction of the possible facial expressions. A widely used method for describing the activation of the individual facial muscles is the Facial Action Coding System (FACS) [3]. FACS decomposes the face into 46 individual Action Units (AUs). FaceReader can automatically detect the 20 most commonly occurring AUs. Using our 3D face model, local keypoints can be accurately tracked to evaluate the activation of each AU. FaceReader expresses the AU activation on the five-level intensity scale (A, B, C, D, E) that is common practice in the FACS community.
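The sketch below shows one way a continuous activation value could be mapped onto the A to E intensity letters. The cut points in `THRESHOLDS` are purely hypothetical, chosen for illustration, and are not the values FaceReader uses; the same holds for the function name `au_intensity` and the "not active" label.

```python
import bisect

# Hypothetical cut points on a 0..1 activation scale (NOT FaceReader's values).
THRESHOLDS = (0.1, 0.3, 0.5, 0.7, 0.9)
LABELS = ("not active", "A", "B", "C", "D", "E")

def au_intensity(activation):
    """Map a continuous AU activation to a FACS-style intensity label."""
    # bisect_right counts how many cut points are <= activation,
    # which is exactly the index of the matching label.
    return LABELS[bisect.bisect_right(THRESHOLDS, activation)]

# Hypothetical activations for two Action Units in one frame.
frame = {"AU12": 0.62, "AU04": 0.05}
coded = {au: au_intensity(value) for au, value in frame.items()}
```

In FACS, A denotes a trace of the action and E its maximum intensity, so a lookup like this keeps the output readable for trained coders while the underlying measurement stays continuous.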

Eye Tracking

Eye tracking determines the direction in which a person is looking by measuring the position and movement of the eyes with respect to the head. Our technology offers a reliable method to track natural eye movement using the generated facial model and the relative eye location. This tool addresses a wide range of applications in psychology, market research and usability analysis.
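The idea of measuring eye position relative to the head can be illustrated with a deliberately simplified 2D sketch: the pupil position is expressed relative to the two eye corners, which move with the head. The function `horizontal_gaze` and its inputs are hypothetical; a real system would also compensate for 3D head pose, which this example ignores.

```python
def horizontal_gaze(inner_corner_x, outer_corner_x, pupil_x):
    """Return the pupil position along the eye as a value in [-1, 1].

    -1 means the pupil sits at the inner corner, 0 means centered,
    and +1 means it sits at the outer corner.
    """
    width = outer_corner_x - inner_corner_x
    if width == 0:
        raise ValueError("eye corners coincide")
    ratio = (pupil_x - inner_corner_x) / width  # 0 at inner corner, 1 at outer
    return max(-1.0, min(1.0, 2.0 * ratio - 1.0))

# Hypothetical pixel coordinates for one eye.
centered = horizontal_gaze(100.0, 140.0, 120.0)
shifted = horizontal_gaze(100.0, 140.0, 130.0)
```

Because the value is normalized by the eye width, it stays comparable when the face moves closer to or further from the camera.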