AID2BeWell – Project Results of Social Robot Prototype for Older Users

In an ageing society it is important to promote active and healthy ageing. A key technology that could help older adults in their home environment is a robot platform that delivers adaptive, personal behaviour change suggestions that promote well-being. To find the right timing and to know which interventions are most suitable for a user, it is important that the robot can assess the user's emotions. In our collaborative AAL project AID2BeWell, we investigated user requirements for such a robot platform and developed a prototype that was able to run FaceReader technology. We carried out quantitative and qualitative research to assess needs and requirements, and ran two pilot studies. In this blog post, we present some of the main findings of our project.

Older users have diverse but mostly positive attitudes towards interacting with a robot

A quantitative online survey was conducted among three age groups (55-64, 65-74, 75+) in three countries (Austria, Belgium, Netherlands) to gain insight into which target group would be most relevant. On average, users rated the utility of robots as high and did not report high robot anxiety. The findings indicate that potential users of a social robot need a certain affinity for technology, or else support from caregivers or family members. The social robot solution is regarded as most beneficial for older age groups who live alone, feel lonely or have minor cognitive or physical impairments. More detailed information on the survey can be found in our deliverables; see also the website: www.aid2bewell.eu

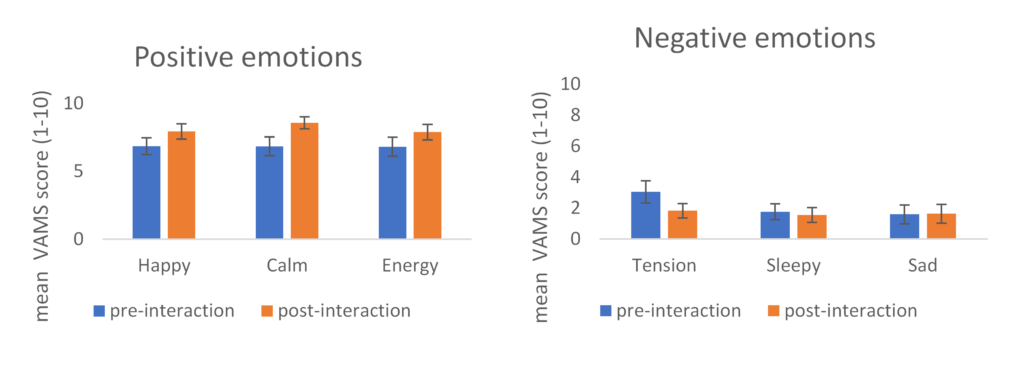

In group and personal interactions, users indicated that they find personalization of the robot important, such as choosing a name or a voice for the robot. The Q.bo robot was generally liked by participants, but they would prefer it to be more dynamic, for example with a moving face instead of only LEDs. In the pilots, the first in the lab and the second unsupervised at home, the robot would start interactions such as an exercise, a game, or information about healthy habits. Most participants liked the interactions. They also liked having someone in the house that reacts to them and attracts their attention. In addition, after a scripted interaction, participants indicated feeling calmer and less tense. Regarding the phrasing of the recommendations, it was important that the user's autonomy was respected, which meant using friendly phrasing and checking with the user before making certain suggestions.

Emotion-based triggers can be more motivating in an interaction

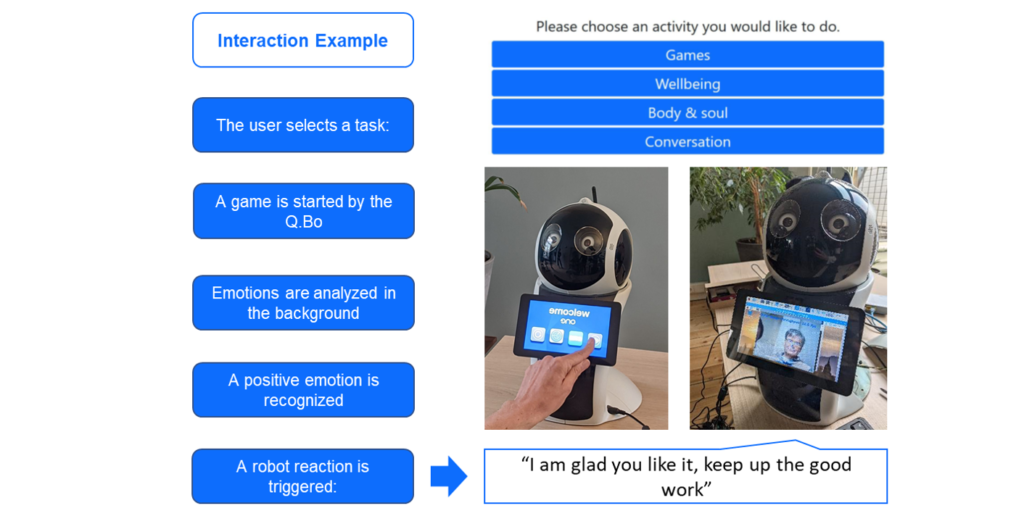

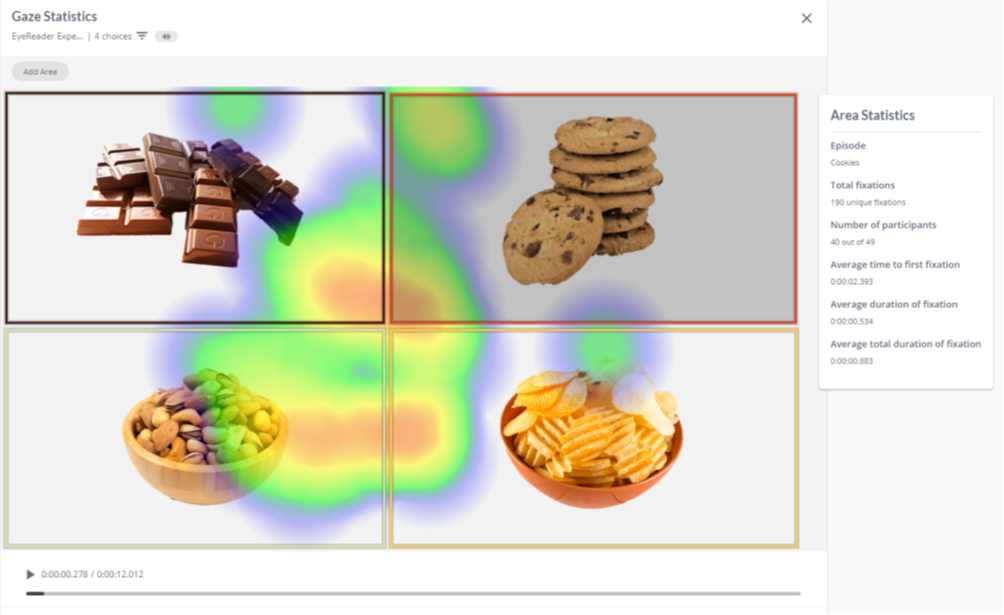

Even with the limited hardware of the Q.bo, which required several additions, we managed to test the emotion recognition software in live settings. In the first pilot, video data of the participants was collected to assess the quality of the video and of the emotion analysis. For a few participants the analysis was not possible because the face did not appear completely in the focus field; on average, however, 98% of the frames could be analysed. Participants were asked to mimic some basic emotions so that we could assess which ones were recognized well. This was then used to determine response interactions for the robot in the unsupervised pilot.

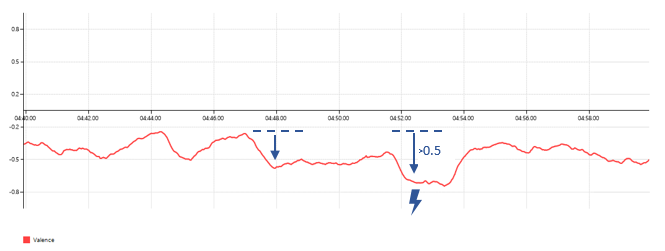

Because it is difficult to assess and express emotions in a short period, valence (happiness versus the highest of the negative emotions) was used to investigate which trigger scenarios would be suitable for the robot. In addition, each individual's maximum and minimum scores over time were considered to allow for some individual calibration. These insights were then used to develop an algorithm for the second pilot that would trigger certain robot behaviour, such as giving motivating feedback or stopping a task. In the prototype tested in the second pilot no recordings were made, but facial analysis was done live, which allowed the robot to respond to the user's emotions. In general, the triggers were rated positively by most participants, with an average rating above neutral. Some participants rated the triggers as motivating, but there was a lot of variation between participants. A post-hoc analysis of the FaceReader output also gave further relevant insights: during the least enjoyed tasks, users fluctuated more in their attention and showed negative valence more frequently.
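To make the idea of a valence-based trigger with individual calibration more concrete, here is a minimal sketch in Python. It is not the algorithm used in the pilot: the emotion keys, the thresholds, the trigger names and the mapping from low or high valence to a robot action are all assumptions chosen for illustration, and the per-frame scores are assumed to arrive as a plain dictionary of intensities between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical emotion labels; the real output format may differ.
NEGATIVE = ("sad", "angry", "scared", "disgusted")


def valence(scores):
    """Happiness minus the strongest negative emotion (assumed range -1..1)."""
    strongest_negative = max(scores.get(name, 0.0) for name in NEGATIVE)
    return scores.get("happy", 0.0) - strongest_negative


@dataclass
class Calibrator:
    """Tracks one user's minimum and maximum valence seen so far."""
    lo: float = float("inf")
    hi: float = float("-inf")

    def update(self, v):
        """Record v and return its position within the user's own range (0..1)."""
        self.lo = min(self.lo, v)
        self.hi = max(self.hi, v)
        if self.hi == self.lo:
            return 0.5  # no range observed yet, treat as neutral
        return (v - self.lo) / (self.hi - self.lo)


def choose_trigger(relative, low=0.2, high=0.8):
    """Map calibrated valence to an illustrative robot action.

    The thresholds and the action names are assumptions, not pilot settings.
    """
    if relative <= low:
        return "offer_to_stop"
    if relative >= high:
        return "motivating_feedback"
    return "none"
```

In use, each analysed frame would be passed through `valence`, then through one `Calibrator` per user, and the result handed to `choose_trigger`. Scaling by the user's own range means that a person who rarely shows strong expressions can still reach either threshold.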

Future directions

We have made large steps in gaining relevant insights into user requirements and in developing a prototype, although many further developments are still needed. Luckily, we have received funding from the EU for a follow-up project starting after the summer. In this 3-year project we will work with the Buddy robot in order to make a truly empathic robot. With further improvements (better hardware, better analysis and more personalization) the robot has real potential to benefit older users. If the program is motivating and leads to more interactions, it is more likely that people will change their behaviour and improve their well-being.